Mastering Sorting Algorithms in Python: A Comprehensive Guide for Efficient Data Sorting

Introduction: Sorting algorithms are fundamental tools in computer science for arranging data in a specified order, such as ascending or descending. Python, as a versatile and powerful programming language, makes it straightforward to implement a wide range of sorting algorithms, each with its own advantages, complexity, and performance characteristics. In this guide, we explore the principles, techniques, and Python implementations of several sorting algorithms, so that developers can choose and implement the algorithm best suited to their requirements.

- Understanding Sorting Algorithms: A sorting algorithm rearranges the elements of a collection into a specified order, typically ascending or descending. Sorting algorithms can be categorized by their time complexity, space complexity, stability (whether equal elements keep their original relative order), and adaptivity (whether they run faster on partially sorted input). Common sorting algorithms include bubble sort, selection sort, insertion sort, merge sort, quick sort, heap sort, and radix sort, each offering different trade-offs in performance and efficiency.

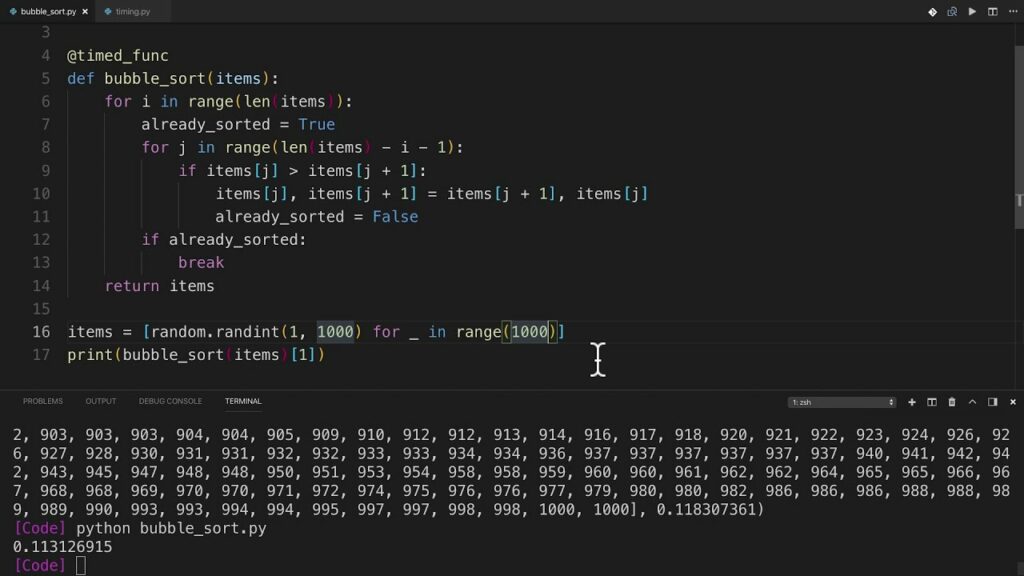

- Bubble Sort: Bubble sort is a simple comparison-based sorting algorithm that repeatedly steps through the list, compares adjacent elements, and swaps them if they are in the wrong order. Bubble sort has a time complexity of O(n^2) in the worst and average cases. With early termination (stopping after a pass that makes no swaps), it runs in O(n) on an already sorted list. Bubble sort is straightforward to implement, but its quadratic time complexity makes it inefficient for large datasets.
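Here is a minimal sketch of the bubble sort described above, including the early-termination check. It assumes the items are mutually comparable. The function name `bubble_sort` is our own, and this version returns a new list rather than sorting in place.

```python
def bubble_sort(items):
    """Return a new list with the items in ascending order, using bubble sort."""
    result = list(items)  # copy so the caller's sequence is left untouched
    n = len(result)
    for end in range(n - 1, 0, -1):
        swapped = False
        # After each pass, the largest remaining element has "bubbled" to index `end`.
        for i in range(end):
            if result[i] > result[i + 1]:
                result[i], result[i + 1] = result[i + 1], result[i]
                swapped = True
        if not swapped:
            # A full pass with no swaps means the list is sorted: stop early.
            break
    return result


print(bubble_sort([5, 1, 4, 2, 8]))  # [1, 2, 4, 5, 8]
```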

- Selection Sort: Selection sort is another simple comparison-based sorting algorithm that divides the input list into two parts: a sorted sublist and an unsorted sublist. The algorithm repeatedly selects the smallest (or largest) element from the unsorted sublist and swaps it with the first element of the unsorted sublist. Selection sort has a time complexity of O(n^2) in all cases, because it always performs n(n-1)/2 comparisons. It makes at most n - 1 swaps, far fewer than bubble sort, which can make it preferable when writes are expensive. In its usual array form, however, it is not stable.
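The following sketch shows the selection step and the single swap per pass. It assumes mutually comparable items. `selection_sort` is an illustrative name, and the function returns a new list.

```python
def selection_sort(items):
    """Return a new list with the items in ascending order, using selection sort."""
    result = list(items)
    n = len(result)
    for start in range(n - 1):
        # Find the index of the smallest element in the unsorted part result[start:].
        min_index = start
        for i in range(start + 1, n):
            if result[i] < result[min_index]:
                min_index = i
        # At most one swap per pass, so at most n - 1 swaps in total.
        if min_index != start:
            result[start], result[min_index] = result[min_index], result[start]
    return result


print(selection_sort([64, 25, 12, 22, 11]))  # [11, 12, 22, 25, 64]
```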

- Insertion Sort: Insertion sort is a simple, stable comparison-based sorting algorithm that builds the final sorted list one element at a time by inserting each element into its correct position in the sorted sublist. It has a time complexity of O(n^2) in the worst and average cases. On already sorted or nearly sorted lists, however, it runs in close to linear O(n) time, because each element moves only a short distance. This makes it well-suited for small datasets and partially sorted lists.
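Here is a sketch of insertion sort, assuming mutually comparable items. The name `insertion_sort` is our own. The strict `>` comparison keeps equal elements in their original order, and on sorted input the inner `while` loop never runs, which gives the O(n) best case.

```python
def insertion_sort(items):
    """Return a new list with the items in ascending order, using insertion sort."""
    result = list(items)
    for i in range(1, len(result)):
        key = result[i]
        j = i - 1
        # Shift elements of the sorted prefix result[:i] that are greater than key
        # one slot to the right, opening a gap where key belongs.
        while j >= 0 and result[j] > key:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = key
    return result


print(insertion_sort([12, 11, 13, 5, 6]))  # [5, 6, 11, 12, 13]
```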

- Merge Sort: Merge sort is a comparison-based sorting algorithm that divides the input list into smaller sublists, recursively sorts each sublist, and then merges the sorted sublists to produce the final sorted list. Merge sort is a stable, divide-and-conquer algorithm with a time complexity of O(n log n) in all cases, making it efficient for sorting large datasets. It is well-suited for parallelization, because the sublists can be sorted independently, and for external sorting, because merging reads each sorted run sequentially. Its main drawback for arrays is the O(n) auxiliary space needed for merging.
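A minimal top-down merge sort sketch follows. It assumes a sliceable sequence (such as a list) of mutually comparable items. The names `merge_sort` and `merge_runs` are illustrative. Taking from the left run on ties (`<=`) is what keeps the sort stable.

```python
def merge_sort(items):
    """Return a new sorted list using top-down merge sort (stable)."""
    if len(items) <= 1:
        return list(items)  # base case: zero or one element is already sorted
    mid = len(items) // 2
    left = merge_sort(items[:mid])
    right = merge_sort(items[mid:])
    return merge_runs(left, right)


def merge_runs(left, right):
    """Merge two sorted lists into one sorted list."""
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        # `<=` takes from the left run on ties, preserving the original order.
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    # One run is exhausted; append whatever remains of the other.
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


print(merge_sort([38, 27, 43, 3, 9, 82, 10]))  # [3, 9, 10, 27, 38, 43, 82]
```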

- Quick Sort: Quick sort is a comparison-based sorting algorithm that selects a pivot element, partitions the other elements into two sublists (those smaller than the pivot and those larger), recursively sorts each sublist, and then combines the results into the final sorted list. Quick sort is a fast divide-and-conquer algorithm with an average time complexity of O(n log n). Its worst case is O(n^2), which occurs when the pivot choice repeatedly produces highly unbalanced partitions, for example when the first element is always chosen as the pivot on an already sorted list.
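The partition-and-concatenate form of quick sort described above can be sketched as follows. The name `quick_sort` and the random pivot are our own choices. This version builds new lists at each level for readability, whereas textbook implementations (Lomuto or Hoare partitioning) rearrange the list in place. Grouping elements equal to the pivot separately keeps lists with many duplicates from degrading the recursion.

```python
import random


def quick_sort(items):
    """Return a new sorted list using quick sort (not in place)."""
    if len(items) <= 1:
        return list(items)
    # A random pivot makes the O(n^2) worst case unlikely for any fixed input.
    pivot = random.choice(items)
    smaller = [x for x in items if x < pivot]
    equal = [x for x in items if x == pivot]
    larger = [x for x in items if x > pivot]
    # Sort both partitions recursively, then concatenate around the pivot group.
    return quick_sort(smaller) + equal + quick_sort(larger)


print(quick_sort([3, 6, 8, 10, 1, 2, 1]))  # [1, 1, 2, 3, 6, 8, 10]
```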

- Heap Sort: Heap sort is a comparison-based sorting algorithm that builds a binary max-heap from the input list. It then repeatedly swaps the maximum element to the end of the list and restores the heap property on the remaining elements until the heap is empty. Heap sort has a time complexity of O(n log n) in all cases. It sorts in place with O(1) auxiliary space, because the heap is stored in the input array itself, and its sift-down step is easily written without recursion. However, heap sort is not stable, and in practice it is often slower than a well-implemented quick sort because of its poor memory locality.
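The sketch below illustrates the in-place, non-recursive character of heap sort. It assumes a mutable list of comparable items. The names `heap_sort` and `sift_down` are our own. Like `list.sort()`, the function modifies its argument and returns `None`.

```python
def heap_sort(items):
    """Sort a list in place in ascending order using heap sort."""
    n = len(items)

    def sift_down(root, end):
        # Move items[root] down until the max-heap property holds within items[:end].
        while True:
            child = 2 * root + 1
            if child >= end:
                return
            # Pick the larger of the two children.
            if child + 1 < end and items[child + 1] > items[child]:
                child += 1
            if items[root] >= items[child]:
                return
            items[root], items[child] = items[child], items[root]
            root = child

    # Build a max-heap bottom-up, starting from the last internal node.
    for start in range(n // 2 - 1, -1, -1):
        sift_down(start, n)
    # Repeatedly move the current maximum to the end and shrink the heap by one.
    for end in range(n - 1, 0, -1):
        items[0], items[end] = items[end], items[0]
        sift_down(0, end)


data = [12, 11, 13, 5, 6, 7]
heap_sort(data)
print(data)  # [5, 6, 7, 11, 12, 13]
```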

- Radix Sort: Radix sort is a non-comparison-based sorting algorithm that sorts elements by their individual digits in a chosen base (the radix). The least-significant-digit (LSD) variant processes digits from the least significant to the most significant, using a stable subroutine such as counting sort or bucket sort for each pass. Radix sort runs in O(k(n + b)) time, where k is the number of digits and b is the base, which simplifies to O(nk) for a fixed base. This makes it efficient for integers or fixed-length strings whose keys have a bounded number of digits.
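Here is a sketch of LSD radix sort, under the assumption that the inputs are non-negative integers. The name `radix_sort` and the `base` parameter are illustrative. Each pass distributes the numbers into per-digit buckets in order, which serves as the stable subroutine (a bucket-based stand-in for counting sort).

```python
def radix_sort(numbers, base=10):
    """Return non-negative integers sorted in ascending order with LSD radix sort."""
    if any(x < 0 for x in numbers):
        raise ValueError("this sketch only handles non-negative integers")
    result = list(numbers)
    if not result:
        return result
    largest = max(result)
    place = 1  # weight of the digit examined in the current pass
    # One stable distribution pass per digit, least significant first.
    while largest // place > 0:
        buckets = [[] for _ in range(base)]
        for x in result:
            buckets[(x // place) % base].append(x)
        # Reading buckets back in order keeps the ordering from earlier passes.
        result = [x for bucket in buckets for x in bucket]
        place *= base
    return result


print(radix_sort([170, 45, 75, 90, 802, 24, 2, 66]))  # [2, 24, 45, 66, 75, 90, 170, 802]
```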

- Implementing Sorting Algorithms in Python: To sort data in Python, developers can write custom functions or use the built-in `sorted()` function and `list.sort()` method. `sorted()` returns a new list from any iterable, while `list.sort()` sorts a list in place. In CPython, both are implemented with Timsort, a stable, adaptive hybrid of merge sort and insertion sort. Custom sorting algorithms can be implemented iteratively or recursively, with appropriate termination conditions and base cases. Developers should weigh time complexity, space complexity, stability, adaptivity, and ease of implementation when choosing and implementing a sorting algorithm.

- Choosing the Right Sorting Algorithm: Choosing the right sorting algorithm depends on factors such as the size of the dataset, the distribution of the data, whether stability is required, the available memory, and the desired performance characteristics. Developers should analyze the requirements and constraints of their specific use case and select the most suitable algorithm accordingly. Benchmarking and profiling tools, such as the standard library's `timeit` and `cProfile` modules, can help evaluate the performance of different sorting approaches in Python.
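The sketch below shows the built-in sorting tools and a simple benchmark. It illustrates `key`, `reverse`, stability on ties, and timing with `timeit`. The sample `records` data and the helper name `time_sort` are hypothetical, chosen only for illustration, and the measured times will vary by machine.

```python
import random
import timeit
from operator import itemgetter

records = [("carol", 35), ("alice", 30), ("bob", 30), ("dave", 25)]

# sorted() returns a new list; key selects what to compare (here, the age).
by_age = sorted(records, key=itemgetter(1))
# Stable: alice and bob (both 30) keep their original relative order.
print(by_age)  # [('dave', 25), ('alice', 30), ('bob', 30), ('carol', 35)]

# list.sort() sorts in place and returns None.
names = [name for name, _ in records]
names.sort(reverse=True)
print(names)  # ['dave', 'carol', 'bob', 'alice']

# Multi-key sort: age descending, then name ascending.
ranked = sorted(records, key=lambda r: (-r[1], r[0]))
print(ranked)  # [('carol', 35), ('alice', 30), ('bob', 30), ('dave', 25)]


def time_sort(sort_fn, data, repeat=5):
    """Hypothetical helper: best-of-`repeat` seconds for one call of sort_fn.

    Each run sorts a fresh copy (the copy is included in the timing), so
    in-place sorts never see already-sorted input from a previous run.
    """
    return min(timeit.repeat(lambda: sort_fn(list(data)), number=1, repeat=repeat))


data = [random.random() for _ in range(10_000)]
print(f"sorted() on 10,000 floats: {time_sort(sorted, data):.4f} s")
```

Any function that takes a list and sorts it can be passed to `time_sort` the same way, which makes it easy to compare a custom implementation against `sorted()` on the same data.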

Conclusion: Sorting algorithms play a crucial role in computer science and data processing, enabling efficient organization and manipulation of data in many applications. By mastering the principles, techniques, and implementations of sorting algorithms in Python, developers can write efficient, scalable, and maintainable code for sorting datasets of varying size and structure. Whether sorting integers, strings, arrays, or custom data structures, Python's built-in sorting tools, together with the ability to implement classic algorithms directly, meet the diverse needs of developers and applications.